Large language models can do a lot out of the box, but on their own they are still sealed systems. You can chat with them, generate text, even get code snippets, yet they don’t naturally know your company’s data, systems, or workflows. That’s why the industry started looking for ways to extend LLMs with integrations.

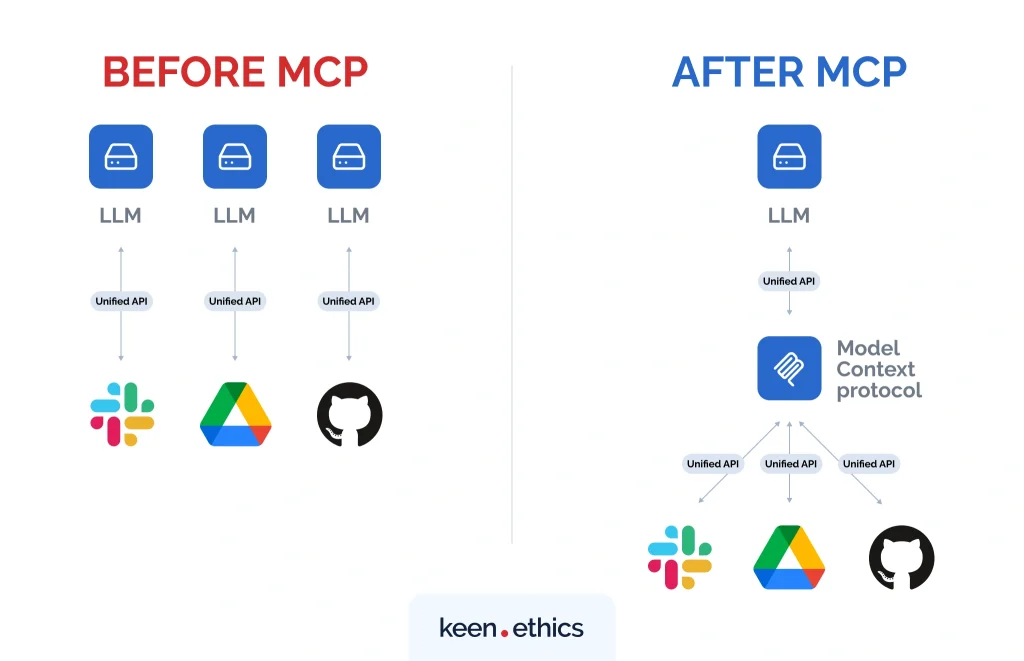

Early attempts solved the problem in fragments: one provider had its own function-calling format, another built a plugin store, and developers often had to rewrite the same logic multiple times. What worked in ChatGPT often failed in Claude, and companies had to juggle integrations, security checks, and hidden vendor lock-in.

The Model Context Protocol (MCP) is the reset button. Often described as the “USB-C for AI,” it creates a common standard that lets clients and models call tools and share context in the same way. Developers can connect corporate data sources, enforce permissions, and run actions across different LLMs without rewriting the stack.

That is why MCP is gaining attention: it makes AI integrations simpler, safer, and more scalable.

What MCP is and why it matters

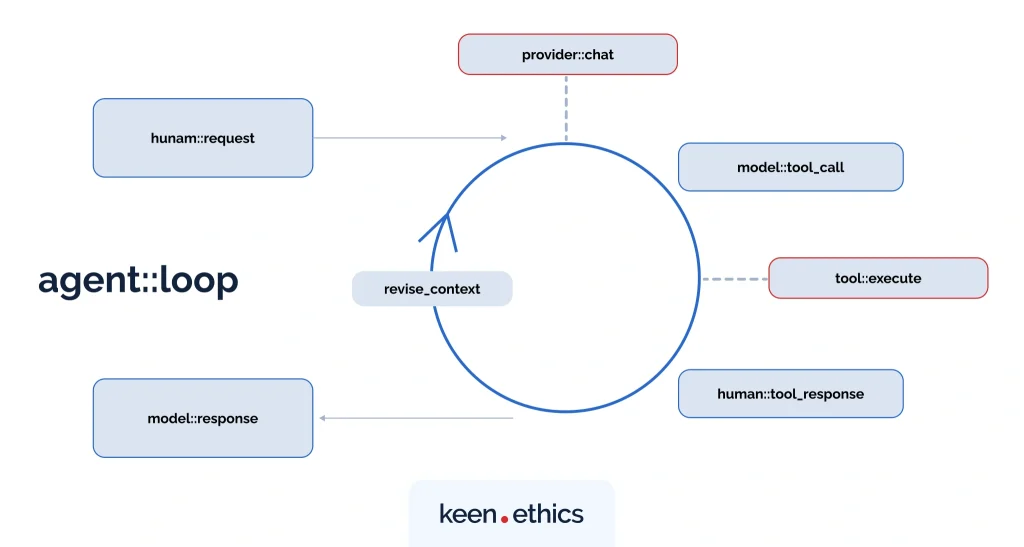

Model Context Protocol is a standard way for language models and clients to interact with tools and external data sources. Before it, integrations were fragmented: each provider built its own function-calling system, which meant an action written for one model often had to be rewritten from scratch for another. This slowed development, increased security risks, and locked teams into specific vendors.

Released by Anthropic as an open-source protocol, MCP removes those barriers. It defines a shared contract, built on JSON-RPC 2.0, for exchanging context, invoking actions, and returning results between LLM clients and the tools they use.
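To make the shared contract concrete, here is a minimal sketch of what a tool invocation might look like on the wire. It assumes the JSON-RPC 2.0 envelope and the `tools/call` method that MCP uses; the tool name `lookup_customer`, its arguments, and the hand-written response are hypothetical, and no real server is contacted.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC ids let the client match responses to requests


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Pull the text parts out of a tool result, or raise on a JSON-RPC error."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    parts = response["result"].get("content", [])
    return "\n".join(p["text"] for p in parts if p.get("type") == "text")


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call("lookup_customer", {"email": "jane@example.com"})
print(json.dumps(request, indent=2))

# A hand-written response in the shape a server might return.
sample_response = {
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"content": [{"type": "text", "text": "Customer found: Jane Doe"}]},
}
print(extract_text(sample_response))
```

Because the envelope stays the same for every tool, a client only needs to change `name` and `arguments` to reach a different integration.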

As one of our developers observed, the shift is significant for two main reasons:

→ Portability across platforms. With MCP, an integration built once can run across multiple providers without forcing developers to rewrite the logic for each environment. This breaks down vendor lock-in and simplifies long-term maintenance.

→ Transparent control. MCP makes permissions, data flows, and policies explicit. Companies can decide what tools the model can access, and under what conditions, be it querying a database, pulling corporate knowledge, or executing an action.

For companies building with MCP, the advantages boil down to reusable integrations, safer execution, and smoother scaling. And if you’re new to the concept and excited about its possibilities, the next step is to look closer at MCP in real life.

Business cases of using MCP

Knowing which major players are backing MCP is useful, but the true measure of the protocol’s value lies in how it is being used today. Early adopters are already showing what’s possible when models interact with tools and data through a shared protocol, and that is what we’ll explore next.

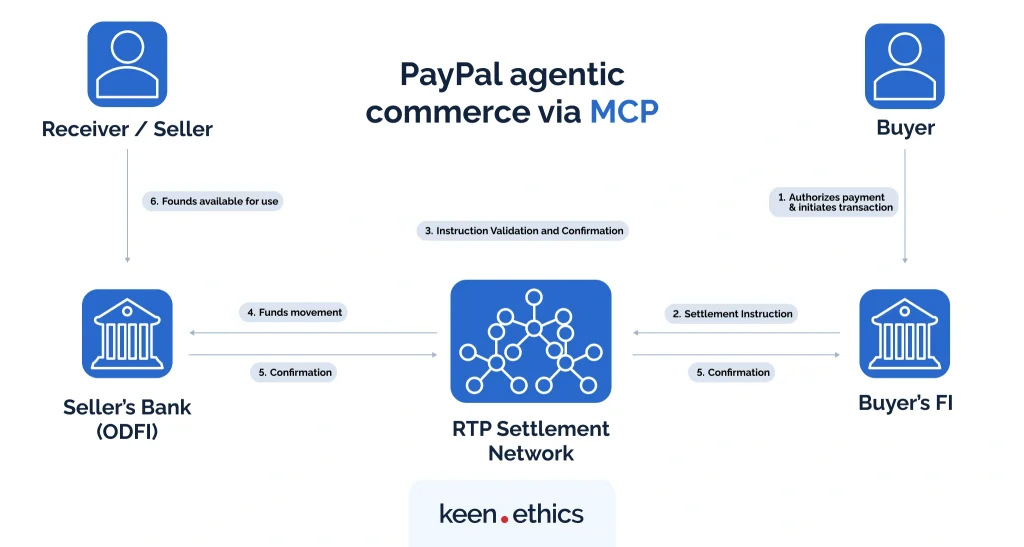

PayPal agentic commerce via MCP

PayPal has enabled an MCP server that exposes payment features to AI agents, allowing developers to build agentic experiences securely. Today, merchants can use language models to create invoices, generate payment links, and manage transactions through tools like create_invoice, pay_order, and create_refund. Each action is wrapped in an OAuth-governed MCP layer, which means the model only receives the exact scope it needs.
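One way to picture scope-limited access is a filter that only exposes the tools a token is allowed to use. The sketch below reuses the tool names mentioned above; the scope strings and the mapping between scopes and tools are assumptions made for illustration, not PayPal’s actual OAuth configuration.

```python
# Hypothetical mapping from tool name to the OAuth scope it requires.
REQUIRED_SCOPE = {
    "create_invoice": "invoices:write",
    "pay_order": "orders:pay",
    "create_refund": "refunds:write",
}


def visible_tools(granted_scopes: set[str]) -> list[str]:
    """Return only the tools whose required scope was granted to this token."""
    return sorted(
        tool for tool, scope in REQUIRED_SCOPE.items() if scope in granted_scopes
    )


def authorize(tool: str, granted_scopes: set[str]) -> None:
    """Reject a call to an unknown tool or one outside the granted scopes."""
    scope = REQUIRED_SCOPE.get(tool)
    if scope is None:
        raise PermissionError(f"unknown tool: {tool}")
    if scope not in granted_scopes:
        raise PermissionError(f"{tool} requires scope {scope}")


token_scopes = {"invoices:write"}  # an invoicing-only agent (assumed scope name)
print(visible_tools(token_scopes))
authorize("create_invoice", token_scopes)  # passes silently
```

Checking the scope both when listing tools and when executing them means an agent neither sees nor can trigger an action it was not granted.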

They’ve also published a QuickStart guide illustrating how to run the MCP server locally for rapid prototyping and later deploy it in production, with auth integration and cloud hosting.

Replit live coding with MCP agents

The developer ecosystem has been one of the fastest to embrace MCP. Replit, for example, has adopted this protocol to give its AI assistants direct access to developer workspaces. Their documentation shows how an MCP server lets models open files, run commands, and interact with projects in real time.

In practice, developers can remix an MCP-equipped Replit template and ask an AI agent to “summarize a YouTube video and write the output to a file,” with a single JSON-RPC call. This means agents are no longer limited to abstract reasoning inside a chat box. They can actually “live” inside the development environment, accessing the same tools programmers use.

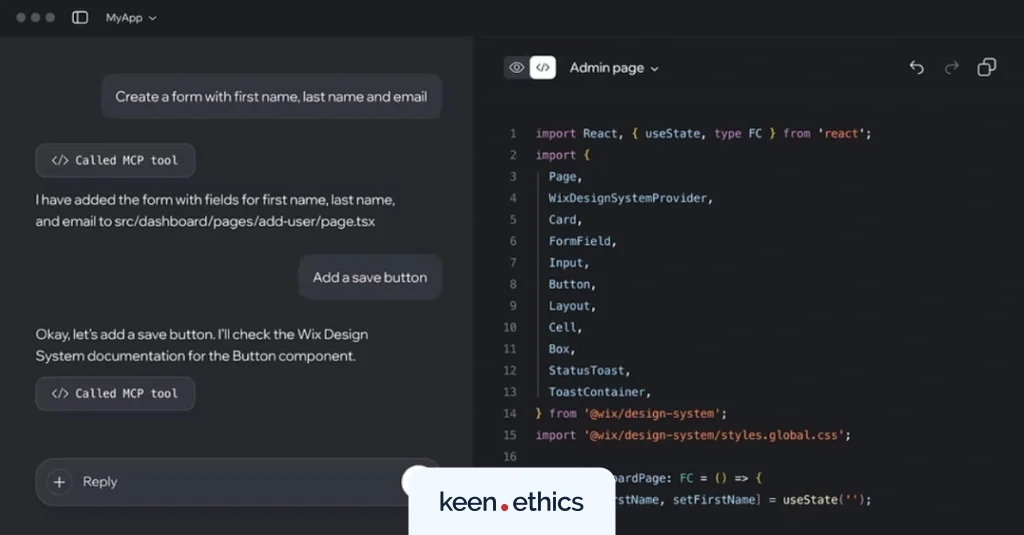

Wix conversational website management

Wix is using MCP to reimagine what a website builder can be. By exposing site-management tools through an MCP server, it allows AI assistants to make direct changes to a live site.

Instead of navigating menus, a small business owner can simply say: “Add a product gallery to my homepage” or “Update the event page with new details.” The model then routes that request via JSON-RPC through the MCP layer, which ensures permissions are respected and only approved actions are executed. This transforms Wix into a platform where agents can operate similarly to no-code users.

Figma design-to-code with precision

Figma’s new MCP integration, available in Dev Mode beta, gives AI tools like Cursor, Claude Code, and Copilot access to real design data. Through its MCP server, the assistant can fetch structured metadata, including layer names, component hierarchy, bounding boxes, and style properties, directly from design files. That’s a leap forward compared to relying only on static screenshots or copy-pasted assets.

For engineers, this means the model can work with the same structured information that a front-end developer sees when inspecting a Figma file. The MCP server runs locally inside Figma’s desktop app and exposes a JSON-RPC endpoint, so connected AI tools can request exactly the data they need.

Block internal tool for enterprise productivity

Inside Block (the company behind Square and Cash App), MCP has already proven itself at scale. Their internal agent, Goose, is built on MCP and used daily by thousands of employees. Goose can connect to more than 60 different MCP servers, from internal APIs to corporate knowledge bases, helping teams automate routine tasks and pull live data into their workflows.

According to Block’s own engineering team, these integrations cut 50–75% of the time spent on repetitive operational work. They also emphasize the importance of designing servers with careful permission scopes and authentication.

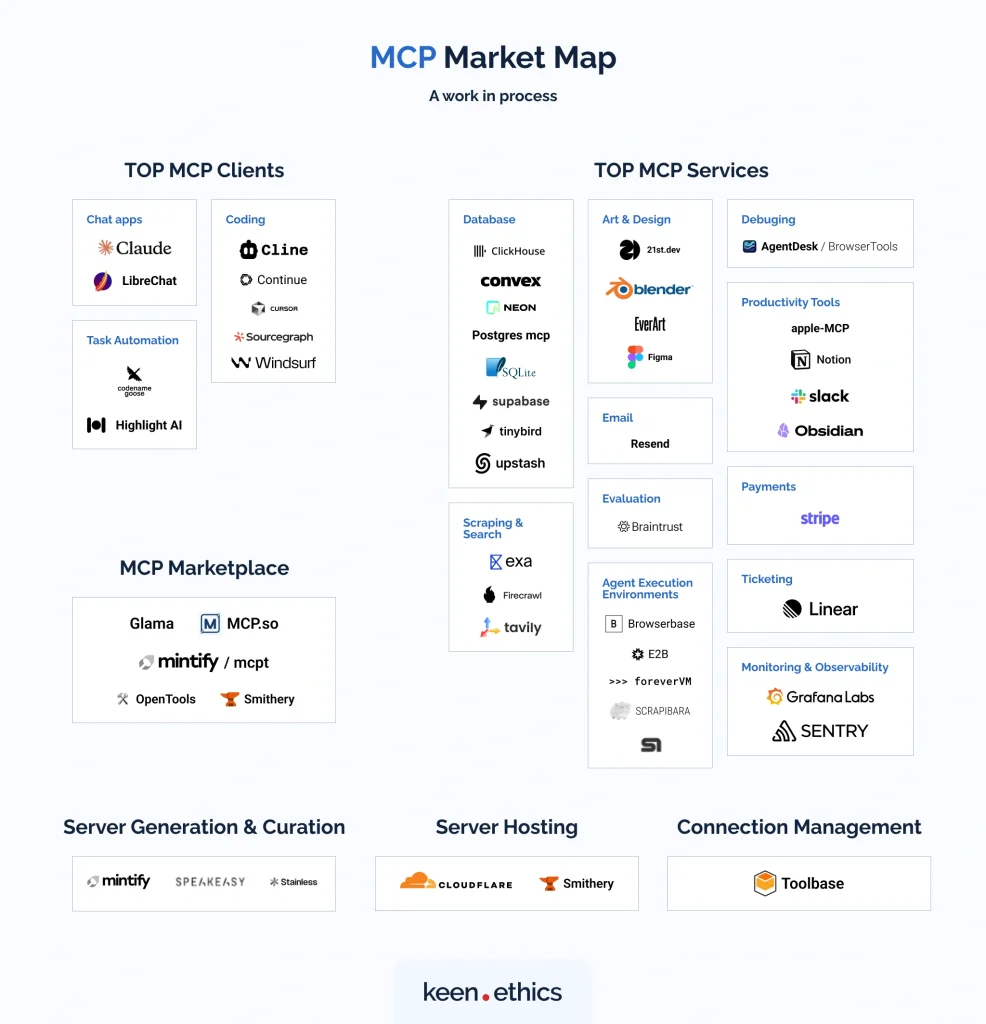

How MCP fits into today’s AI ecosystem

MCP mechanics have quickly made their way into production systems. Within months of its release, major AI players began adopting the protocol, turning it into a cross-industry standard. Here’s how things unfolded:

November 2024. Anthropic open-sources MCP, enabling Claude to connect to local and cloud tools.

March 2025. OpenAI officially adopts MCP across Agents SDK, ChatGPT Desktop, and the Responses API.

April 2025. Google DeepMind confirms MCP support in upcoming Gemini models and related infrastructure.

April 2025. Cloudflare adds MCP support to its Workers runtime.

May 2025. Microsoft releases native MCP support in Copilot Studio.

As you can see, this rapid sequence of announcements quickly pushed MCP into the spotlight. Behind this rise are a few key players, each taking a slightly different path to adoption. Let’s look at how they shaped the protocol’s growth.

→ Anthropic

MCP was introduced by Anthropic as an open protocol in 2024. Claude Desktop was the first flagship client to ship with native support, which was later extended to the web. This mattered because Claude instantly became a testbed for MCP in real workflows: developers could attach a local server to query files, connect a remote API, or even spin up hybrid setups.

→ OpenAI

OpenAI’s pivot to MCP was a turning point. Until then, their function-calling system was tightly coupled to ChatGPT, creating friction for teams trying to reuse integrations elsewhere. By adopting MCP in the Agents SDK, ChatGPT Desktop, and the Responses API, OpenAI effectively legitimized it as a cross-vendor standard.

Now, a developer who defines a tool once can see it work across Claude, ChatGPT, and any other MCP-aware client. As our developer pointed out, this portability solves a long-standing pain point — what used to be duplicated integrations can now run consistently across providers.
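A rough sketch of what "define once" can mean in practice: a tool is described by a name, a description, and a JSON Schema for its inputs, and any MCP-aware client can discover it through a `tools/list` request. The `get_order_status` tool, its fields, and the tiny in-process dispatcher are hypothetical and stand in for a real server SDK.

```python
from typing import Any, Callable

TOOLS: dict[str, dict[str, Any]] = {}
HANDLERS: dict[str, Callable[..., str]] = {}


def register(name: str, description: str, input_schema: dict,
             handler: Callable[..., str]) -> None:
    """Record a tool descriptor plus the function that implements it."""
    TOOLS[name] = {"name": name, "description": description, "inputSchema": input_schema}
    HANDLERS[name] = handler


def handle(request: dict) -> dict:
    """Answer the two JSON-RPC methods a client needs to discover and run tools."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result: dict[str, Any] = {"tools": list(TOOLS.values())}
    elif method == "tools/call":
        text = HANDLERS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Hypothetical tool, defined once and served to whichever client connects.
register(
    "get_order_status",
    "Look up the shipping status of an order.",
    {"type": "object", "properties": {"order_id": {"type": "string"}},
     "required": ["order_id"]},
    lambda order_id: f"Order {order_id} is in transit",
)

listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "get_order_status", "arguments": {"order_id": "A-17"}}})
print(listing["result"]["tools"][0]["name"], "->", call["result"]["content"][0]["text"])
```

The client never needs provider-specific glue here: it reads the descriptor, validates arguments against the schema, and sends the same request regardless of which model sits behind it.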

→ Google DeepMind

In a post on X, Google DeepMind’s CEO announced the decision to add MCP support to the Gemini models. The decision was both strategic and symbolic. Symbolic, because it positioned MCP as an “open standard for the agentic era,” endorsed by one of the leading research houses in AI. Strategic, because it plugged MCP into Gemini’s SDK and agent infrastructure, signaling that future autonomous agents built on Gemini would speak the same language as Claude and ChatGPT.

For enterprise teams betting on multi-agent orchestration or RAG pipelines, this means MCP is likely to be the connective tissue between otherwise siloed ecosystems.

→ Cloudflare

At the infrastructure level, Cloudflare has become an important enabler. Adding MCP to Workers allowed developers to deploy servers globally with built-in authentication, DDoS protection, and rate-limiting. That matters because one of MCP’s challenges, especially with SSE and streamable HTTP, is scaling connections reliably under load.

With Workers, a server built locally can be pushed to Cloudflare’s edge and instantly become available worldwide, resilient, and secure.

Cloudflare also released scaffolding tools that help developers spin up MCP servers quickly, lowering the entry barrier for experimentation.

→ Microsoft

Microsoft is adopting MCP as part of its strategy to integrate AI into enterprise ecosystems. MCP fits naturally with Semantic Kernel and plugin frameworks, allowing cross-platform LLM agents to tap into corporate data and services. Native support has already shipped in Copilot Studio, and although the broader rollout is still in its early stages, MCP is positioned to become a bridge between Microsoft’s enterprise stack and the wider AI ecosystem.

The implications of MCP are clear. One of the most important shifts lies in compliance and auditing. Because every request, response, and permission in MCP is explicit, organizations can trace what an AI system did, what data it accessed, and under which policy. For industries like finance, healthcare, or government, this level of accountability is often mandatory, and MCP makes it far easier to provide.
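As an illustration of why explicit requests help with auditing, the sketch below wraps a tool dispatcher so that every call leaves a structured record. The record fields, the `policy` label, the `run_report` tool, and the `fake_dispatch` stand-in are assumptions for this example; MCP itself does not prescribe an audit-log format.

```python
import json
import time
from typing import Any, Callable

AUDIT_LOG: list[dict[str, Any]] = []  # a real deployment would use durable storage


def audited(dispatch: Callable[[dict], dict], user: str,
            policy: str) -> Callable[[dict], dict]:
    """Wrap a JSON-RPC dispatcher so each request and its outcome is recorded."""
    def wrapper(request: dict) -> dict:
        response = dispatch(request)
        params = request.get("params", {})
        AUDIT_LOG.append({
            "timestamp": time.time(),
            "user": user,
            "policy": policy,
            "method": request.get("method"),
            "tool": params.get("name"),
            "arguments": params.get("arguments"),
            "outcome": "error" if "error" in response else "ok",
        })
        return response
    return wrapper


def fake_dispatch(request: dict) -> dict:
    """Stand-in for a real MCP server: always returns a fixed result."""
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": "42 rows"}]}}


call_tool = audited(fake_dispatch, user="analyst@example.com", policy="read-only-reporting")
call_tool({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "run_report", "arguments": {"quarter": "Q3"}}})
print(json.dumps(AUDIT_LOG[-1], indent=2))
```

Since the tool name and arguments are already structured fields in the request, the log entry needs no parsing of free-form model output.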

On the practical side, MCP shortens the path from prototype to production. A tool that begins as a local MCP server for testing can later be deployed globally through environments like Cloudflare Workers or Microsoft Copilot Studio, with authentication and scaling already handled. Add to that the fact that industry leaders are aligning around MCP, and the risk of lock-in drops significantly.

If you are now familiar with what MCP can do and want to explore the protocol from the technical side, our next article covers exactly that.

Is MCP becoming the TCP/IP of the agentic era?

The history of computing is full of moments when progress was blocked by fragmentation. In the early internet, every machine spoke its own language, and connecting them meant building custom bridges for each pair. That bottleneck disappeared once the Transmission Control Protocol/Internet Protocol (TCP/IP) emerged as a single layer for any device.

AI is now facing a similar moment. LLMs have become incredibly capable, but every provider built its own way of connecting to tools and data, leaving teams to rewrite the same logic over and over, multiplying effort and increasing risk. MCP breaks that cycle. It defines a common JSON-RPC contract for exchanging context and calling tools, giving models and clients a shared language.

And while other agent protocols such as A2A and ACP are emerging, MCP already covers the layer that governs how agents fetch context, run tools, and return results. A recent paper in the European Journal of Computer Science and Information Technology notes that MCP can also manage real-time observability streams, enforce rate limits, and address token-window limits through context caching strategies.

Put together, these moves indicate that MCP is becoming a standard. If platforms like Meta, Amazon, or Apple decide to join, the protocol could solidify as the universal language of AI actions. Until then, what’s undeniable is that MCP has already crossed the line from experiment to infrastructure.

A glimpse into the future of AI-driven interfaces

As soon as MCP was released, the community started asking a bigger question: if agents can call tools through a shared protocol, why stop at APIs? What if the protocol also defined how users interact with agents directly? That’s the idea behind MCP-UI, a new extension that treats user interfaces as part of the protocol itself.

Rather than clicking through a website or navigating a complex dashboard, a user could open an AI client like Claude or Cursor and simply say what they want. The client then negotiates with an MCP server, which returns data together with UI components described as structured events, and the client renders them in place.
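The sketch below shows one way a tool result could carry a UI component alongside plain text. The `ui://` URI, the `text/html` payload, the transfer form, and the toy `render` helper are assumptions chosen for illustration and are not taken from the MCP-UI specification.

```python
from html import escape


def transfer_form_result(from_account: str) -> dict:
    """Build a tool result with a text fallback and an embedded HTML form."""
    form = (
        "<form>"
        f"<input name='from' value='{escape(from_account, quote=True)}' readonly>"
        "<input name='to' placeholder='Recipient'>"
        "<input name='amount' type='number' min='0'>"
        "<button type='submit'>Transfer</button>"
        "</form>"
    )
    return {
        "content": [
            {"type": "text", "text": "Please confirm the transfer details."},
            {"type": "resource",
             "resource": {"uri": "ui://bank/transfer-form",  # hypothetical URI
                          "mimeType": "text/html",
                          "text": form}},
        ]
    }


def render(result: dict) -> list[str]:
    """Toy client: describe what it would display for each content part."""
    shown = []
    for part in result["content"]:
        if part["type"] == "text":
            shown.append(f"message: {part['text']}")
        elif part["type"] == "resource" and part["resource"]["mimeType"] == "text/html":
            shown.append(f"sandboxed UI from {part['resource']['uri']}")
    return shown


print(render(transfer_form_result("CHK-001")))
```

Keeping a plain-text part next to the UI payload lets clients without rendering support still show something useful.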

For businesses, this could shift how digital experiences are built and delivered. A company wouldn’t need to maintain a separate web front-end for every use case. Instead, the AI client itself becomes the interface. A banking agent could generate a secure transaction form when asked to transfer money, or a design agent connected via Figma’s MCP server could render live component previews inside the chat window.

The long-term implication is that MCP-UI could evolve into a new interaction layer for the web, where AI agents provide standardized UIs in place of static websites. It’s an early experiment, but one that suggests a future where working with software feels more like having a continuous dialogue.

What comes next

MCP is in its early days, yet the pace of progress has been remarkable. What began as a fresh idea for unifying model–tool interaction is already shaping up into a standard, refined almost in real time. Many technical details remain under active exploration, and in parallel, alternative approaches to LLM tooling are also gaining traction.

For teams watching the space, that uncertainty is inviting. There is still plenty to build, test, and debate, and the next stage of AI tooling is only beginning.
