Large language models are great at generating answers, but users today expect more. They want data that’s visual, interactive, and immediately useful. To meet that demand, developers are turning to the Model Context Protocol (MCP).

Take a crypto exchange support chat as an example. A user asks about Bitcoin, and instead of a purely textual explanation, the assistant responds with a chart of the coin’s price history. The chart is rendered in the chat window itself, interactive and always up to date, while the assistant’s reply adds context and analysis.

That’s MCP in action. And this is only the beginning. In this article, we’ll explore how MCP makes these experiences possible, the different approaches to rendering UI, and what developers should keep in mind when building tools that go beyond text.

How MCP defines what users see in the chat

The Bitcoin chart at the start of this article points to something many LLM integrations struggle to support. Language models generate text well, but rendering a fully interactive UI inside chat messages is a different challenge altogether.

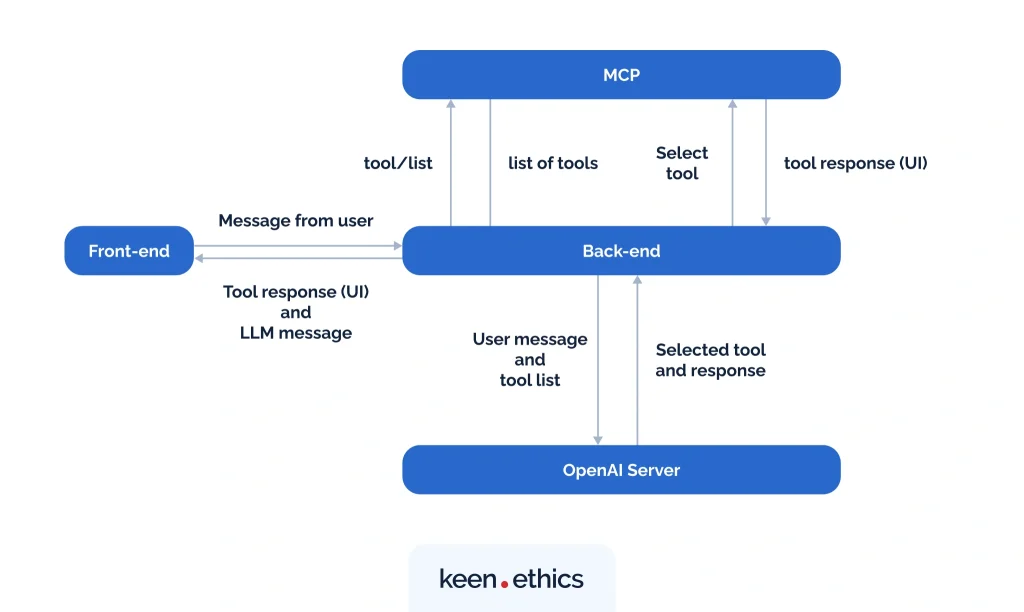

Without tool-defined rendering, an LLM might call an API and return JSON, leaving you to parse and render it manually. With tools that define their own presentation, the model signals which tool to use, and the tool itself defines how the result should appear, whether as a chart, a widget, or a full page embedded in the conversation.

In practice, that means a chart drawn on the frontend with Assistant UI, or a complete component streamed through the backend with MCP-UI. From this point on, we’ll look at both paths.
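Both paths rest on the same idea: the tool, not the model, decides how its output is presented. Here is a minimal sketch, assuming a hypothetical result format in which each tool attaches a display hint (`DisplayKind`) to its payload. Neither the type names nor the `getPriceHistory` tool come from MCP or any specific library; the point is that the client switches on what the tool declares instead of guessing from raw JSON.

```typescript
// Hypothetical result format: each tool declares how its output should be shown.
type DisplayKind = "chart" | "widget" | "page";

interface ToolResult {
  tool: string; // name of the tool that produced the result
  display: DisplayKind; // presentation chosen by the tool, not by the model
  data: unknown; // raw payload, e.g. price points for a chart
}

// The client no longer guesses from the JSON; it follows the tool's hint.
function describeRendering(result: ToolResult): string {
  switch (result.display) {
    case "chart":
      return `render <Chart> for ${result.tool}`;
    case "widget":
      return `render <Widget> for ${result.tool}`;
    case "page":
      return `embed page for ${result.tool}`;
    default: {
      // Compile-time exhaustiveness check: fails to type-check if a kind is added.
      const unreachable: never = result.display;
      throw new Error(`Unknown display kind: ${String(unreachable)}`);
    }
  }
}

const priceHistory: ToolResult = {
  tool: "getPriceHistory",
  display: "chart",
  data: [], // price points would go here
};
console.log(describeRendering(priceHistory)); // render <Chart> for getPriceHistory
```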

Assistant UI for building tools

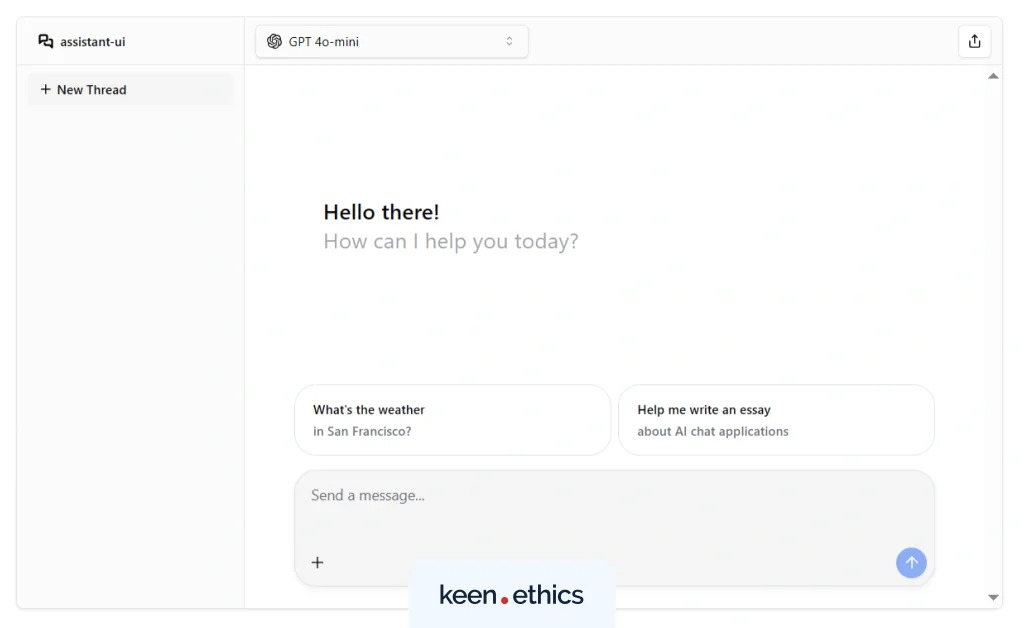

Assistant UI is a React library that equips developers with a complete set of components for building chat interfaces connected to LLMs; its starter scaffold is a Next.js app. More importantly, it allows you to define MCP tools directly on the frontend and decide exactly how their output should be rendered inside the conversation.

“Instead of being limited to text responses, your assistant can return interactive UI elements directly in the chat flow.” — Alexander Shcherbatov, Keenethics developer

A simple weather tool is the best way to see this in action. Let’s break down how it might work:

1. Set up the project

Start by setting up a simple `assistant-ui` app with the command `npx assistant-ui init`.

2. Configure the backend and tools

The generated scaffold is a full-stack Next.js app with a single API endpoint defined in `route.ts`.

This is where you configure communication with the LLM and choose the model you want to use. It is also where tools are defined: the provided example implements a function that fetches the weather for a given location. The full example is here.
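The scaffold wires tools through its LLM SDK. As a dependency-free sketch of what such a definition contains, a tool is a name, a description the model reads, a JSON Schema for its arguments, and an `execute` function. The `ToolDefinition` interface, the `getWeather` tool, and its canned data are hypothetical; a real tool would call a weather API.

```typescript
// Hypothetical tool shape; LLM SDKs provide their own helpers for this.
interface ToolDefinition<Args, Result> {
  name: string;
  description: string; // read by the model to decide when to call the tool
  parameters: Record<string, unknown>; // JSON Schema describing Args
  execute: (args: Args) => Promise<Result>;
}

interface WeatherArgs {
  location: string;
}

interface WeatherResult {
  location: string;
  temperatureC: number;
  condition: string;
}

// Canned data stands in for a real weather API call.
const FAKE_WEATHER: Record<string, Omit<WeatherResult, "location">> = {
  berlin: { temperatureC: 18, condition: "cloudy" },
  lisbon: { temperatureC: 24, condition: "sunny" },
};

const getWeather: ToolDefinition<WeatherArgs, WeatherResult> = {
  name: "getWeather",
  description: "Get the current weather for a city.",
  parameters: {
    type: "object",
    properties: { location: { type: "string", description: "City name" } },
    required: ["location"],
  },
  async execute({ location }) {
    const entry = FAKE_WEATHER[location.trim().toLowerCase()];
    if (entry === undefined) {
      throw new Error(`No weather data for ${location}`);
    }
    return { location, ...entry };
  },
};
```

The JSON Schema is what lets the model produce well-formed arguments; `execute` only runs once the model has chosen the tool.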

3. Provide authentication

Add your API key to the environment file (.env), just like in this example. Without this, your app won’t be able to make requests to the LLM.
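A small guard can turn a missing key into a clear startup error instead of a failed request later. This is a hypothetical helper, and the variable name `OPENAI_API_KEY` is an assumption that depends on your LLM provider; the environment object is passed in so the sketch stays testable.

```typescript
// Hypothetical helper: fail fast when a required variable is missing,
// instead of failing later on the first request to the LLM.
function requireEnv(env: Record<string, string | undefined>, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing ${name}: add it to your .env file`);
  }
  return value;
}
```

In a Next.js route handler this would be called as `requireEnv(process.env, "OPENAI_API_KEY")` before creating the model client.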

4. Define the tools UI on the frontend

The UI for each tool is written as a React component that describes how the tool’s result should be rendered once it is returned. For our weather example, the component looks like this:

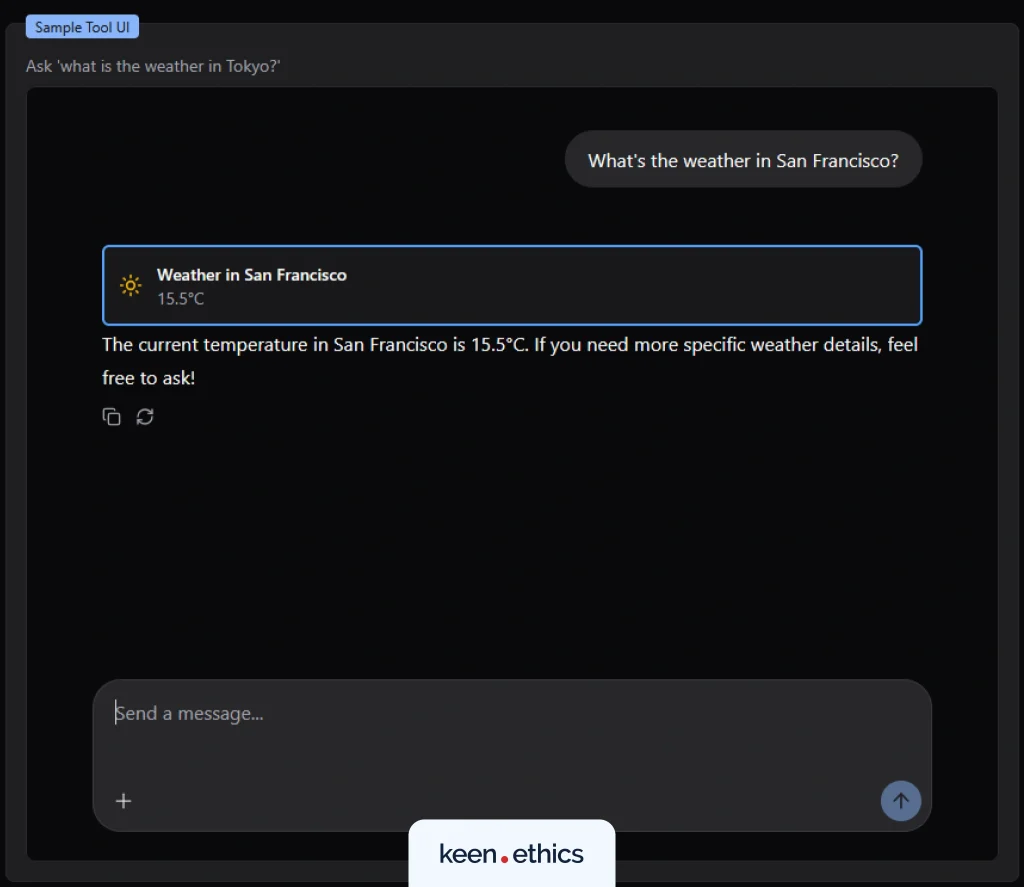
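In Assistant UI the tool UI is a React component. To keep the sketch runnable without React, the hypothetical `renderWeatherTool` below returns a plain element tree, similar in spirit to what JSX produces. It assumes the component receives the tool’s arguments as soon as the model calls the tool and the result only once the tool has finished, so it can show a loading state in between.

```typescript
// Minimal element tree standing in for what a React component would return.
interface VNode {
  tag: string;
  className?: string;
  children: Array<VNode | string>; // strings are text content, not markup
}

interface WeatherArgs {
  location: string;
}

interface WeatherResult {
  location: string;
  temperatureC: number;
  condition: string;
}

// Hypothetical tool UI: args arrive when the model calls the tool,
// the result only once the tool has finished running.
function renderWeatherTool(args: WeatherArgs, result?: WeatherResult): VNode {
  if (result === undefined) {
    return {
      tag: "div",
      className: "weather loading",
      children: [`Checking the weather in ${args.location}...`],
    };
  }
  return {
    tag: "div",
    className: "weather",
    children: [
      { tag: "h3", children: [result.location] },
      { tag: "p", children: [`${result.temperatureC}°C, ${result.condition}`] },
    ],
  };
}
```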

5. See it in action

Wrap your app in `AssistantRuntimeProvider`, which manages tool execution and message rendering. Once the provider is in place, your custom tool components can appear anywhere in the chat flow.

When the LLM decides to call your weather tool, its result is rendered above the assistant’s message, using whatever UI you defined: a chart, a button, an HTML fragment, and so on.

At runtime, the LLM sees the available tools and picks the appropriate one; Assistant UI then takes care of executing the call, collecting the output, and rendering the UI in place. That’s what makes the chat feel seamless: text mixed with live components.
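The loop that Assistant UI runs for you can be sketched roughly as follows. Everything here (`ToolCall`, `RegisteredTool`, `runTurn`) is hypothetical and simplified: in a real exchange the model usually writes its reply after seeing the tool result, whereas this sketch passes the reply text in up front to stay short.

```typescript
// Hypothetical, simplified version of the loop a chat runtime performs.
interface ToolCall {
  toolName: string;
  args: unknown;
}

interface ModelTurn {
  toolCalls: ToolCall[]; // tools the model asked for, in order
  text: string; // the assistant's reply (supplied up front in this sketch)
}

interface RegisteredTool {
  execute: (args: unknown) => Promise<unknown>;
  render: (args: unknown, result: unknown) => string;
}

type ChatItem = { kind: "tool-ui"; html: string } | { kind: "text"; text: string };

async function runTurn(turn: ModelTurn, tools: Map<string, RegisteredTool>): Promise<ChatItem[]> {
  const items: ChatItem[] = [];
  for (const call of turn.toolCalls) {
    const tool = tools.get(call.toolName);
    if (tool === undefined) {
      // The model requested a tool the app does not provide.
      items.push({ kind: "text", text: `Unknown tool: ${call.toolName}` });
      continue;
    }
    const result = await tool.execute(call.args);
    items.push({ kind: "tool-ui", html: tool.render(call.args, result) });
  }
  // Tool UI is placed above the assistant's text reply.
  items.push({ kind: "text", text: turn.text });
  return items;
}
```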

A reference for rendering a forecast:

MCP-UI for rendering components

But frontend control isn’t always enough. When the UI has to be defined and served by the backend, MCP-UI shifts that responsibility to the server. It is a library and protocol that lets the server deliver UI components (with CSS and JavaScript) as packaged resources, while the client renders them safely inside the conversation.

The important distinction is that the LLM never generates or manipulates raw HTML. Everything is written by developers and passed forward in a controlled way.

MCP-UI supports three types of resources that a tool can return:

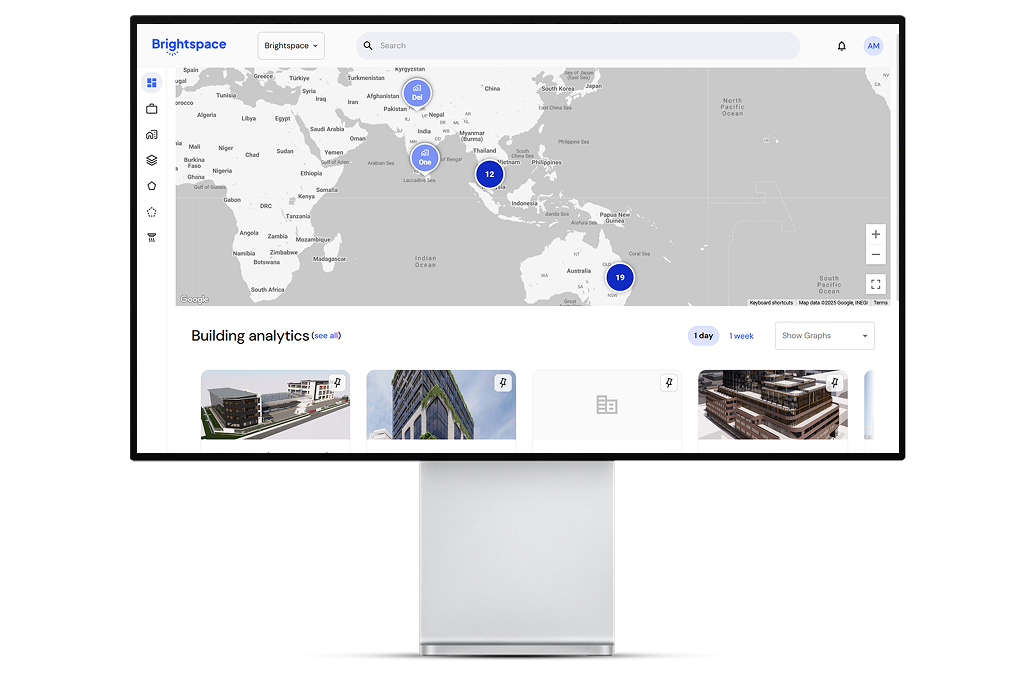

- URL resources: links to full external pages that the client loads into an iframe, letting you embed dashboards, apps, or websites inside the conversation.

- Raw HTML resources: full HTML/CSS/JS pages passed as strings, embedded directly in the tool’s response.

- Remote DOM resources: scripts that describe DOM mutations on an abstract root, which the client replays and maps to real components.
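All three kinds travel in the same resource envelope and differ mainly in MIME type. The sketch below is modeled on MCP-UI’s resource format (a `ui://` URI, a MIME type, and the content as text); treat the exact MIME strings and field names as assumptions to check against the MCP-UI documentation, which also provides helpers for building these objects. The `classify` function shows how a client might pick a renderer.

```typescript
// Resource envelope modeled on MCP-UI's UI resources (field names are assumptions).
interface UIResource {
  type: "resource";
  resource: { uri: string; mimeType: string; text: string };
}

// MIME types distinguishing the three kinds (verify against the MCP-UI docs).
const MIME = {
  url: "text/uri-list",
  rawHtml: "text/html",
  remoteDom: "application/vnd.mcp-ui.remote-dom+javascript; framework=react",
} as const;

function makeResource(id: string, mimeType: string, text: string): UIResource {
  return { type: "resource", resource: { uri: `ui://${id}`, mimeType, text } };
}

// Server side: one builder per kind.
const urlResource = (id: string, url: string) => makeResource(id, MIME.url, url);
const rawHtmlResource = (id: string, html: string) => makeResource(id, MIME.rawHtml, html);
const remoteDomResource = (id: string, script: string) => makeResource(id, MIME.remoteDom, script);

// Client side: choose a renderer from the MIME type.
type ResourceKind = "url" | "rawHtml" | "remoteDom" | "unsupported";

function classify(res: UIResource): ResourceKind {
  const mime = res.resource.mimeType;
  if (mime === MIME.url) return "url";
  if (mime === MIME.rawHtml) return "rawHtml";
  if (mime.startsWith("application/vnd.mcp-ui.remote-dom")) return "remoteDom";
  return "unsupported";
}
```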

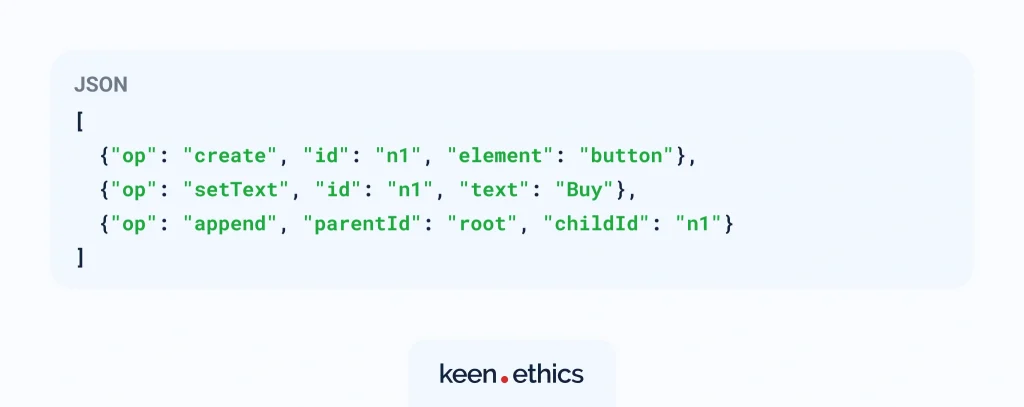

Here’s a simplified example of how a Remote DOM script describes UI:
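As an illustration, assume a hypothetical mutation format and a fake `RemoteRoot` that records operations instead of touching a real DOM. The real protocol differs in detail, but the idea is the same: the script emits operations, not markup.

```typescript
// Hypothetical mutation format (the real protocol differs in detail).
type Mutation =
  | { op: "create"; id: number; tag: string }
  | { op: "setAttribute"; id: number; name: string; value: string }
  | { op: "append"; parentId: number; childId: number };

// Fake abstract root: records what the script does instead of touching a DOM.
// Node id 0 is the root itself.
class RemoteRoot {
  readonly mutations: Mutation[] = [];
  private nextId = 1;

  createElement(tag: string): number {
    const id = this.nextId++;
    this.mutations.push({ op: "create", id, tag });
    return id;
  }

  setAttribute(id: number, name: string, value: string): void {
    this.mutations.push({ op: "setAttribute", id, name, value });
  }

  appendChild(parentId: number, childId: number): void {
    this.mutations.push({ op: "append", parentId, childId });
  }
}

// What a tool's script might do: a card with a refresh button.
const root = new RemoteRoot();
const card = root.createElement("ui-card");
root.setAttribute(card, "title", "BTC/USD");
const button = root.createElement("ui-button");
root.setAttribute(button, "label", "Refresh");
root.appendChild(card, button);
root.appendChild(0, card);
// root.mutations is the array the host receives.
```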

The host receives this array of mutations and rebuilds the interface using a component mapping library that matches remote nodes, along with their attributes and events, to your own React components. You can use the base MCP-UI library or plug in your own, for example a Radix-based mapping as shown in the official demo. A full example of the Remote DOM workflow can be found here.
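On the host side, replaying mutations through a component mapping might look like this minimal sketch. The `Mutation` format, the `ui-card`/`ui-button` tags, and the `Card`/`Button` component names are hypothetical. Tags without a mapping become a placeholder and are reported, which is one way to notice when mappings drift out of sync with the script.

```typescript
// Hypothetical mutation format, matching what a remote script would emit.
type Mutation =
  | { op: "create"; id: number; tag: string }
  | { op: "setAttribute"; id: number; name: string; value: string }
  | { op: "append"; parentId: number; childId: number };

// Host-side tree: each node names one of your own components.
interface HostNode {
  component: string;
  props: Record<string, string>;
  children: HostNode[];
}

// Component mapping: remote tag -> host component (names are hypothetical).
const componentMap: Record<string, string> = {
  "ui-card": "Card",
  "ui-button": "Button",
};

function replay(mutations: Mutation[], map: Record<string, string>): { root: HostNode; unmapped: string[] } {
  const root: HostNode = { component: "Root", props: {}, children: [] };
  const nodes = new Map<number, HostNode>([[0, root]]);
  const unmapped: string[] = [];
  for (const m of mutations) {
    switch (m.op) {
      case "create": {
        const component = map[m.tag];
        if (component === undefined) unmapped.push(m.tag); // mapping drift
        nodes.set(m.id, { component: component ?? "Unsupported", props: {}, children: [] });
        break;
      }
      case "setAttribute": {
        const node = nodes.get(m.id);
        if (node) node.props[m.name] = m.value;
        break;
      }
      case "append": {
        const parent = nodes.get(m.parentId);
        const child = nodes.get(m.childId);
        if (parent && child) parent.children.push(child);
        break;
      }
    }
  }
  return { root, unmapped };
}
```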

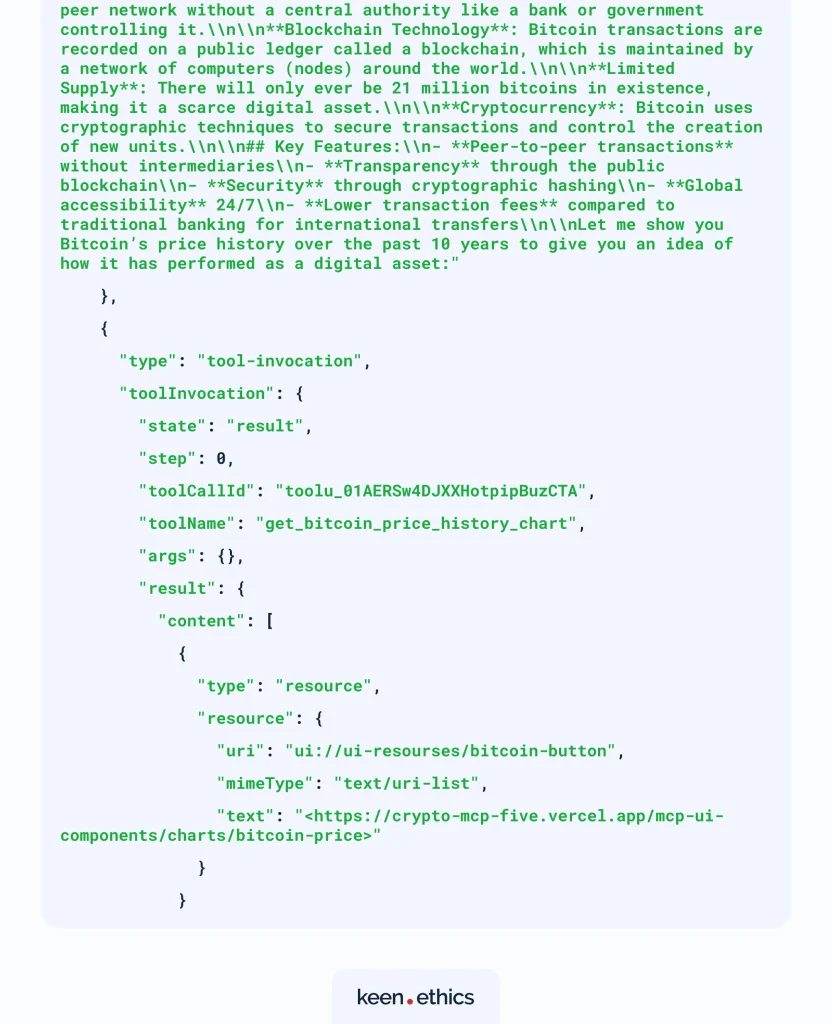

From the developer’s perspective, the workflow stays familiar, but the division of labor behind it matters. On the backend, the AI library’s `streamText` function streams the model’s text and executes a tool once the model decides which one to call. You can see how this works in the `/api/chat` route of the scira-mcp-ui-chat repo.

On the frontend, MCP-UI takes over: resources arrive through `chat.tsx` and are rendered in the `message.tsx` component. In other words, the model picks the tool, the AI library runs it, and MCP-UI delivers the result back into the conversation.

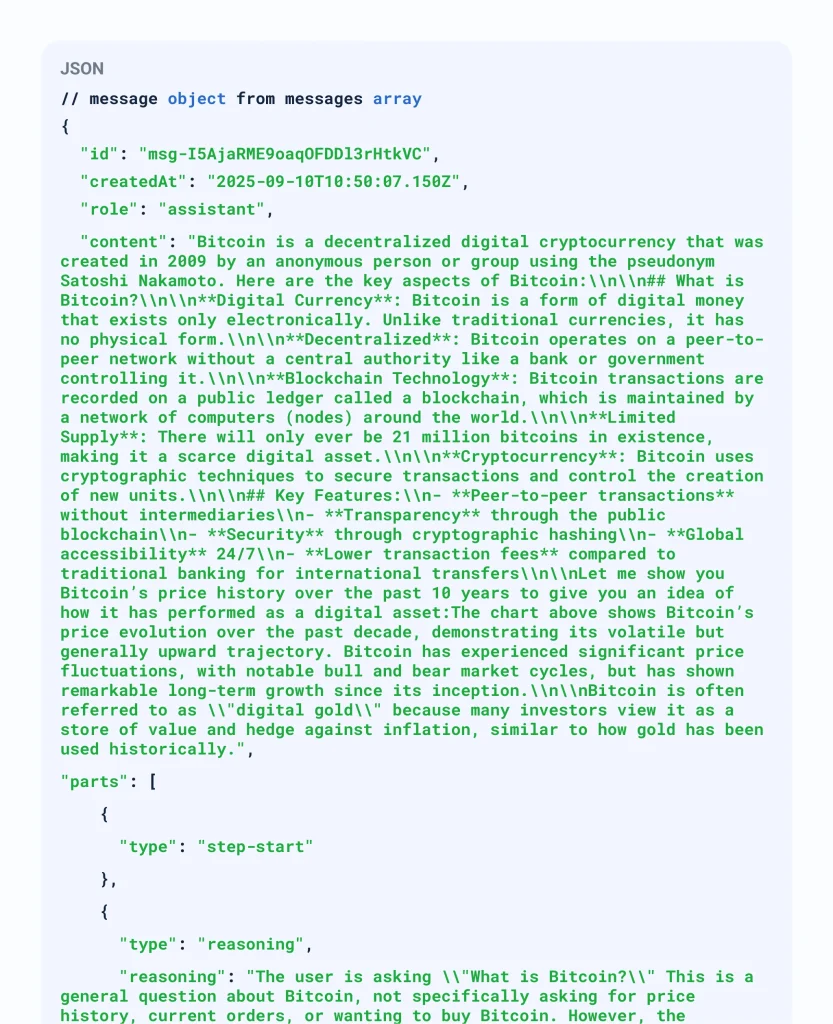

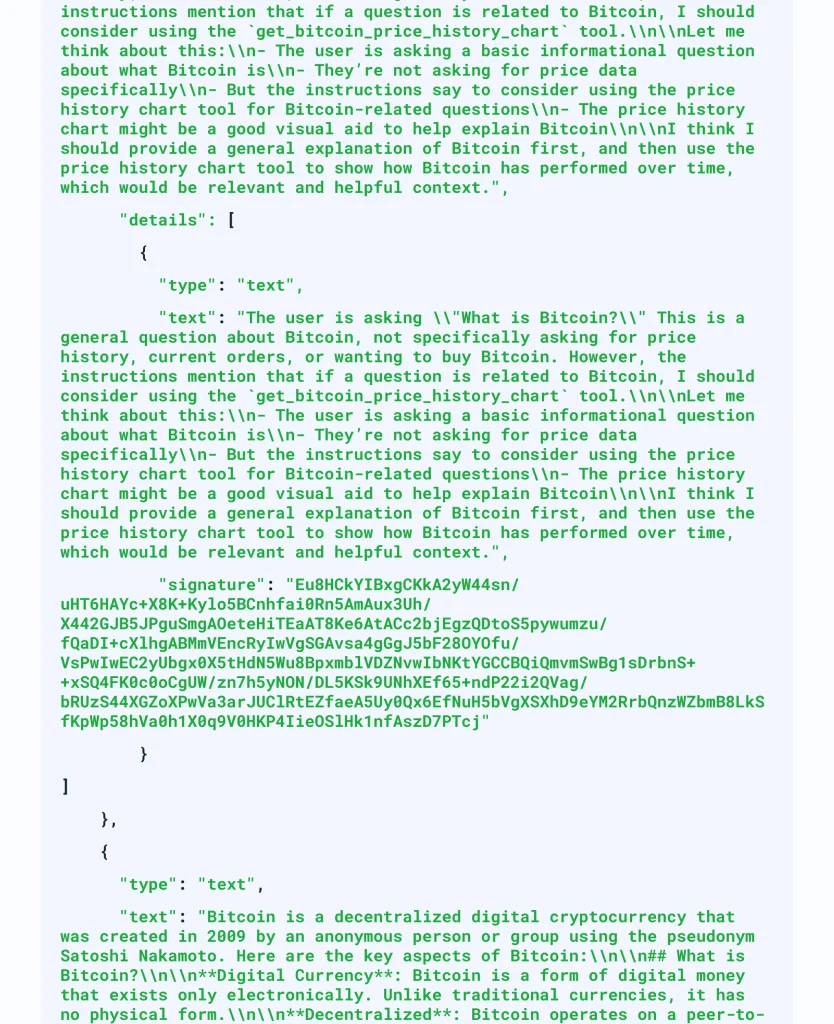

Here’s a real message object example, showing how text, reasoning, and tool invocations appear together in a single assistant reply:
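The exact object depends on the SDK version the repo uses, so the example below is illustrative rather than a captured message: a hypothetical shape loosely modeled on the AI SDK’s message parts, with reasoning, a completed tool invocation, and text. The `collectUIResources` helper shows how a client might pull MCP-UI resources out of tool results, assuming those results use MCP’s `{ content: [...] }` layout.

```typescript
// Hypothetical message shape, loosely modeled on AI SDK message parts.
interface UIResource {
  type: "resource";
  resource: { uri: string; mimeType: string; text: string };
}

type Part =
  | { type: "text"; text: string }
  | { type: "reasoning"; reasoning: string }
  | {
      type: "tool-invocation";
      toolInvocation: { toolName: string; state: "call" | "result"; args: unknown; result?: unknown };
    };

interface AssistantMessage {
  id: string;
  role: "assistant";
  parts: Part[];
}

function isUIResource(value: unknown): value is UIResource {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as { type?: unknown; resource?: { uri?: unknown } | null };
  const uri = candidate.resource?.uri;
  return candidate.type === "resource" && typeof uri === "string" && uri.startsWith("ui://");
}

// Collect MCP-UI resources from finished tool invocations in one message.
function collectUIResources(message: AssistantMessage): UIResource[] {
  const found: UIResource[] = [];
  for (const part of message.parts) {
    if (part.type !== "tool-invocation" || part.toolInvocation.state !== "result") continue;
    // Assumption: tool results follow MCP's { content: [...] } layout.
    const raw = (part.toolInvocation.result as { content?: unknown } | null | undefined)?.content;
    const content: unknown[] = Array.isArray(raw) ? raw : [];
    for (const item of content) {
      if (isUIResource(item)) found.push(item);
    }
  }
  return found;
}

// Illustrative values only.
const example: AssistantMessage = {
  id: "msg-1",
  role: "assistant",
  parts: [
    { type: "reasoning", reasoning: "The user asked about Bitcoin; show the price chart." },
    {
      type: "tool-invocation",
      toolInvocation: {
        toolName: "showPriceChart",
        state: "result",
        args: { symbol: "BTC" },
        result: {
          content: [{ type: "resource", resource: { uri: "ui://btc-chart", mimeType: "text/html", text: "<div>chart</div>" } }],
        },
      },
    },
    { type: "text", text: "Here is Bitcoin's recent price history." },
  ],
};
```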

“MCP-UI treats UI as a first-class resource. You don’t hack around with HTML in prompts. The UI is written by you, delivered safely by the backend, and rendered consistently in the client.” — Alexander Shcherbatov, Keenethics developer

URL resources

URL resources are often the quickest way to get something working. You return a link, and the client simply loads the page into an iframe. For developers, this means you can ship updates centrally: change the page once, and every integration gets the new version immediately. It also lets you reuse any stack you like — React, Angular, Svelte — and bring in the visuals or interactivity you already have.
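Turning a URL resource into an embed can be as small as the hypothetical helper below, which only accepts HTTPS URLs on an allowlist of hosts and adds a `sandbox` attribute. With `allow-scripts` but without `allow-same-origin`, the page runs its JavaScript in an opaque origin, so it cannot read the host app’s cookies or storage; pages that need their own cookies (for example, to keep a user logged in) would also need `allow-same-origin`.

```typescript
// Hypothetical helper: turn a URL resource into sandboxed iframe markup.
function iframeFor(url: string, allowedHosts: ReadonlySet<string>): string {
  const parsed = new URL(url); // throws a TypeError on malformed URLs
  if (parsed.protocol !== "https:") {
    throw new Error(`Refusing non-HTTPS URL: ${url}`);
  }
  if (!allowedHosts.has(parsed.hostname)) {
    throw new Error(`Host not on allowlist: ${parsed.hostname}`);
  }
  // Escape characters that matter inside a double-quoted attribute.
  const src = parsed.href
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
  // allow-scripts without allow-same-origin: the page runs JS in an opaque origin.
  return `<iframe src="${src}" sandbox="allow-scripts allow-forms" loading="lazy"></iframe>`;
}
```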

But this convenience comes with infrastructure baggage: you need to host and secure the site, monitor uptime, and deal with browser security policies such as CSP, X-Frame-Options, and CORS. Users generally like the result because they get a live, familiar interface with search, filters, or login already built in. The fragility shows up when the site refuses to be embedded or slows down under external scripts and trackers.

“URL resources look simple until you deploy them. The moment you embed a page, headers like X-Frame-Options or CSP can shut it down, and you often need a proxy just to make it display.” — Alexander Shcherbatov, Keenethics developer
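Before wiring a URL into the chat, you can check whether its response headers allow framing at all. The hypothetical `canBeFramed` below is a simplified sketch: it understands CSP `frame-ancestors` with `'none'`, `'self'`, `*`, and exact origins, plus X-Frame-Options `DENY` and `SAMEORIGIN`. Per the CSP specification, `frame-ancestors` takes precedence when both headers are present. Wildcard hosts, scheme-only sources, multiple CSP headers, and nested frames are deliberately not handled.

```typescript
// Simplified sketch: may a page served from pageOrigin be framed by hostOrigin?
function canBeFramed(
  headers: Record<string, string>,
  pageOrigin: string,
  hostOrigin: string,
): boolean {
  // Header names are case-insensitive.
  const lower: Record<string, string> = {};
  for (const [name, value] of Object.entries(headers)) {
    lower[name.toLowerCase()] = value;
  }

  // Look for a frame-ancestors directive in the CSP header.
  let ancestors: string[] | undefined;
  for (const directive of (lower["content-security-policy"] ?? "").split(";")) {
    const [name, ...sources] = directive.trim().split(/\s+/);
    if (name.toLowerCase() === "frame-ancestors") {
      ancestors = sources;
      break;
    }
  }

  if (ancestors !== undefined) {
    // frame-ancestors wins over X-Frame-Options; 'none' or an empty list matches nothing.
    return ancestors.some(
      (source) =>
        source === "*" ||
        (source.toLowerCase() === "'self'" && pageOrigin === hostOrigin) ||
        source.replace(/\/$/, "") === hostOrigin,
    );
  }

  const xfo = lower["x-frame-options"]?.trim().toLowerCase();
  if (xfo === "deny") return false;
  if (xfo === "sameorigin") return pageOrigin === hostOrigin;
  return true; // no framing restrictions found
}
```

When this returns `false`, the usual options are the ones the quote mentions: ask the site owner to allow your origin, or serve the page through a proxy you control.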

Raw HTML resources

Raw HTML resources are almost the opposite. Here, the entire page is returned as a string, with HTML, CSS, and JavaScript bundled directly in the tool’s response. No hosting, no deployments, no uptime worries. In developer terms, you know exactly what’s sent, and you can even keep versions with metadata like ETags or timestamps.
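One way to use that metadata, sketched here under the assumption that your transport supports HTTP-style conditional requests, is to tag each snapshot with a content hash and a timestamp and skip resending a payload the client already has. The helpers are hypothetical and not part of MCP-UI; FNV-1a is used only because it is short and dependency-free, and a cryptographic hash would work the same way.

```typescript
// FNV-1a (32-bit) over UTF-16 code units: a cheap, non-cryptographic hash
// that is enough to detect that a snapshot's content changed.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, multiplied modulo 2^32
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

interface HtmlSnapshot {
  html: string;
  etag: string; // quoted, as in an HTTP ETag header
  createdAt: string; // ISO timestamp
}

function snapshot(html: string, now: Date = new Date()): HtmlSnapshot {
  return { html, etag: `"${fnv1a(html)}"`, createdAt: now.toISOString() };
}

// Hypothetical conditional response: skip the payload if the client's copy is current.
function respond(
  snap: HtmlSnapshot,
  clientEtag?: string,
): { status: 200 | 304; etag: string; body?: string } {
  if (clientEtag === snap.etag) {
    return { status: 304, etag: snap.etag };
  }
  return { status: 200, etag: snap.etag, body: snap.html };
}
```

This eases the payload problem described next, but only for repeat requests; the first delivery of a large page is still a full transfer.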

It’s great for stable snapshots, comparisons between releases, or lightweight content that must render the same every time. But as pages grow, the payloads get heavy, and every invocation means shipping the whole thing back to the client. Latency grows, storage fills, and the content ages quickly. For the user, the upside is speed and stability, but there’s no interactivity beyond what’s baked in.

“Raw HTML is predictable, but you have to treat it like any other snapshot. It gets old fast, and without sanitization you’re inviting XSS into your chat.” — Alexander Shcherbatov, Keenethics developer
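For HTML you write yourself, the usual defense is to escape every value you interpolate into the template. The hypothetical `html` tagged template below does that automatically: literal parts written by the developer pass through, and interpolated values are escaped. This protects data inserted into your own markup; it does not make an untrusted HTML fragment safe, which calls for a dedicated sanitizer such as DOMPurify and a sandboxed iframe.

```typescript
const ESCAPES: Record<string, string> = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

function escapeHtml(value: unknown): string {
  return String(value).replace(/[&<>"']/g, (ch) => ESCAPES[ch]);
}

// Tagged template: literal parts (written by the developer) pass through,
// every interpolated value is escaped before it reaches the markup.
function html(strings: TemplateStringsArray, ...values: unknown[]): string {
  let out = strings[0];
  values.forEach((value, i) => {
    out += escapeHtml(value) + strings[i + 1];
  });
  return out;
}

// Example: a coin name coming from an external API is treated as text.
const coinName = '<img src=x onerror="alert(1)">';
const card = html`<div class="coin"><h3>${coinName}</h3></div>`;
console.log(card);
// <div class="coin"><h3>&lt;img src=x onerror=&quot;alert(1)&quot;&gt;</h3></div>
```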

Remote DOM resources

Remote DOM resources sit in a different category altogether. Instead of sending markup, the server streams DOM mutations that the client replays in a sandboxed environment. This is how you can surface truly dynamic UIs, the kind that only appear after JavaScript runs, after a button click, or after login.

The client mirrors exactly what a user would see in a single-page app, down to personalization or infinite scroll. The catch is complexity. You’re juggling scripts, mutation queues, mapping libraries, and often dealing with cookies, sessions, or anti-bot protections. It runs slower and breaks more easily when selectors or markup change. But when interactivity is essential, nothing else comes close.

“Remote DOM delivers interactivity in a way nothing else does. Still, each mutation is mirrored back into your app, and if selectors or component mappings shift, things start to fall apart quickly.” — Alexander Shcherbatov, Keenethics developer

What this means for developers

The more we explore and develop in this space, the more evident it becomes that MCP opens new possibilities for developers. You’re no longer required to hack around JSON or force-fit model output into your own rendering layer. With this new concept, what once felt like backend wiring turns into frontend design.

The real value of MCP is that it puts design decisions back in your hands. The model decides when a tool should be used, but you decide what it looks like and how it behaves. That’s a rare balance in AI integration where the intelligence of the model meets the craft of UI engineering.

As chat interfaces spread into support, productivity, and enterprise apps, this balance between model intelligence and UI design will only grow in importance. Future iterations may reduce the overhead of handling resources, giving developers even more control with less boilerplate.

Contact us to find out more about our software development services!